Quote

Hi @all, I'm back to working on infrastructure provisioning. Infrastructure as Code is truly huge right now, and in the current landscape every DevOps engineer should know at least one IaC tool. For me, that tool is Terraform, and I keep coming back to it. Today I want to share what I actually think after working with Terraform day to day. Let's dig in!

Install and set up the environment for Terraform

Documentation: 🔗 Click the link

Info

What is Terraform?

Terraform is an infrastructure as code tool that lets you build, change, and version infrastructure safely and efficiently. This includes low-level components like compute instances, storage, and networking; and high-level components like DNS entries and SaaS features.

Info

How does Terraform work?

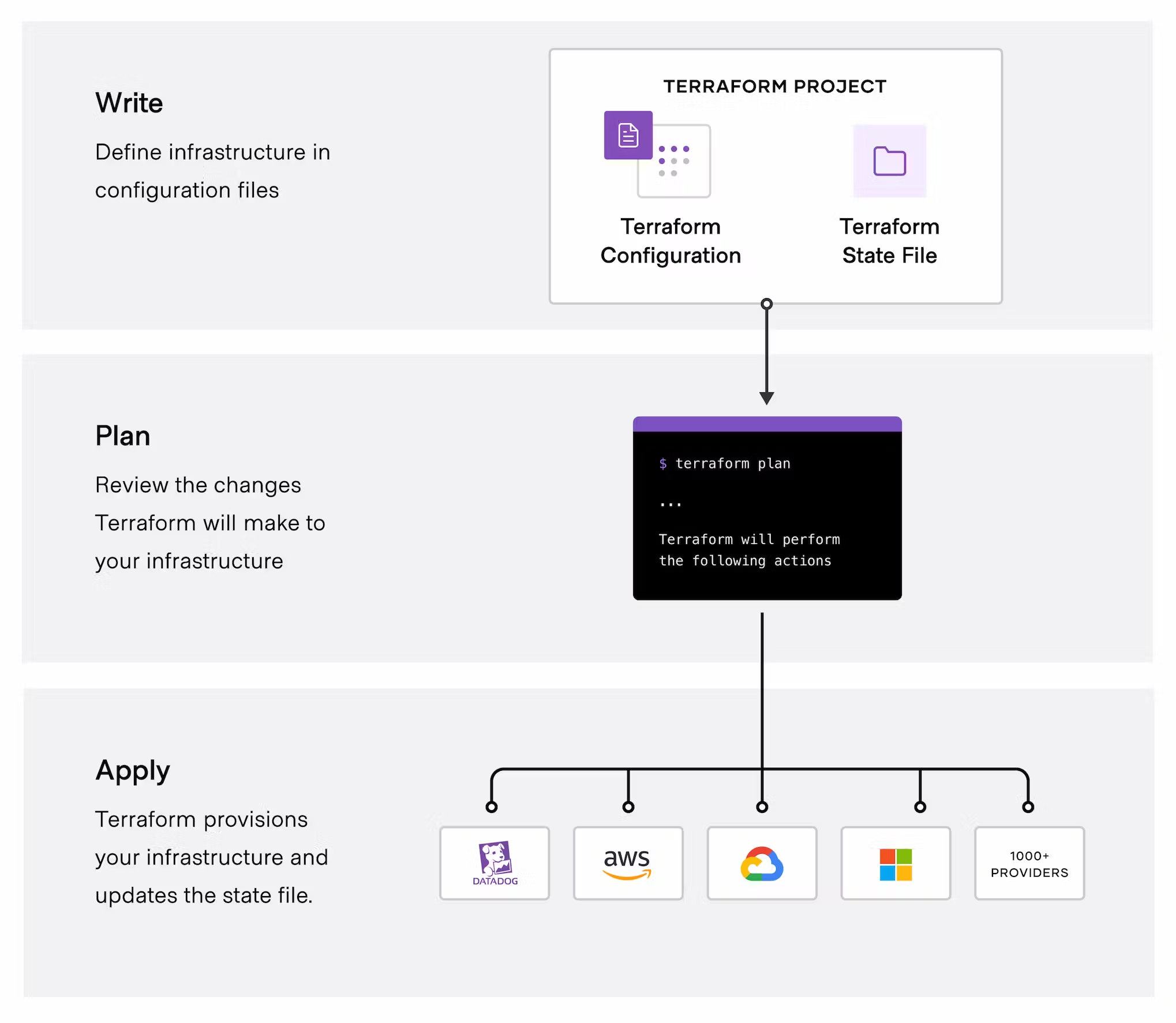

The core Terraform workflow consists of three stages:

- Write: You define resources, which may be across multiple cloud providers and services. For example, you might create a configuration to deploy an application on virtual machines in a Virtual Private Cloud (VPC) network with security groups and a load balancer.

- Plan: Terraform creates an execution plan describing the infrastructure it will create, update, or destroy based on the existing infrastructure and your configuration.

- Apply: On approval, Terraform performs the proposed operations in the correct order, respecting any resource dependencies. For example, if you update the properties of a VPC and change the number of virtual machines in that VPC, Terraform will recreate the VPC before scaling the virtual machines.
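As a minimal sketch of the Write stage, the configuration below declares one Azure resource group. The resource name `rg-example` and the location `westeurope` are hypothetical placeholders. Against an empty state, `terraform plan` should report one resource to add, and `terraform apply` then creates it.

```hcl
terraform {
  required_providers {
    azurerm = {
      source  = "hashicorp/azurerm"
      version = "=3.85.0"
    }
  }
}

# The azurerm provider requires a features block, even if it is empty
provider "azurerm" {
  features {}
}

# Hypothetical resource group; name and location are placeholders
resource "azurerm_resource_group" "example" {
  name     = "rg-example"
  location = "westeurope"
}
```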

Installing Terraform only requires a single binary. Explore the installation guide for more details; for example, on Debian/Ubuntu:

```shell
wget -O- https://apt.releases.hashicorp.com/gpg | sudo gpg --dearmor -o /usr/share/keyrings/hashicorp-archive-keyring.gpg
echo "deb [signed-by=/usr/share/keyrings/hashicorp-archive-keyring.gpg] https://apt.releases.hashicorp.com $(lsb_release -cs) main" | sudo tee /etc/apt/sources.list.d/hashicorp.list
sudo apt update && sudo apt install terraform
```

Note

If you use Windows, please see the documentation for more information: Terraform - Windows Version

Validate the installation and check the Terraform version

```shell
$ terraform --version
Terraform v1.6.2
on linux_amd64

Your version of Terraform is out of date! The latest version
is 1.7.5. You can update by downloading from https://www.terraform.io/downloads.html
```

What does Terraform do?

Info

Terraform plays a key role in my daily work, including:

- Manage any infrastructure

- Track your infrastructure

- Automate changes

- Standardize configurations

- Collaborate

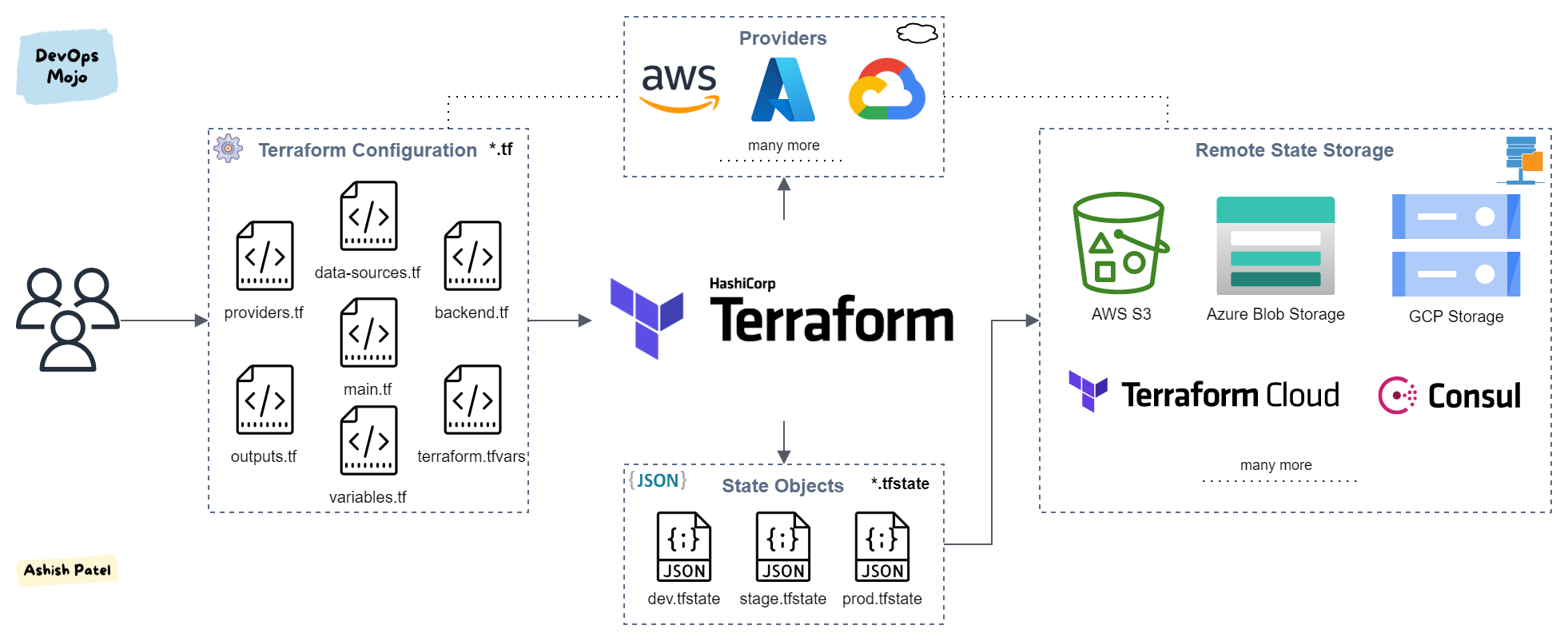

Source: DevOps Mojo

Info

When you apply Infrastructure as Code with Terraform, the outcome of the workflow from `init` ⇒ `apply` is stored in a JSON file called `*.tfstate` (`terraform.tfstate` by default for local state). The state file contains full details of the resources in your Terraform code. When you modify your code and apply it to the cloud, Terraform reads the state file, compares your code against it, and works out which changes to make to the infrastructure.

Question

The `*.tfstate` file plays an important role in the infrastructure lifecycle, so keeping it safe is a priority. HashiCorp offers features for storing the file:

- Remote State

- Delegation and Teamwork

- Locking and Teamwork

More details: https://developer.hashicorp.com/terraform/language/state/remote
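Delegation works by letting one configuration read another configuration's outputs from remote state. A minimal sketch with the `azurerm` backend, assuming the Azure configuration keeps its state in a Storage Account container; all `xxxx` values and the output name `aks_cluster_name` are hypothetical placeholders. The `azurerm` backend also supports state locking using native Azure Blob Storage capabilities, so no separate lock store is needed.

```hcl
# Read outputs published by another Terraform configuration (e.g. the Azure part)
data "terraform_remote_state" "azure" {
  backend = "azurerm"

  config = {
    resource_group_name  = "xxxx"
    storage_account_name = "xxxx"
    container_name       = "xxxx"
    key                  = "xxxx"
  }
}

# Hypothetical usage: the producing configuration must declare an
# output named "aks_cluster_name" for this reference to resolve
locals {
  aks_cluster_name = data.terraform_remote_state.azure.outputs.aks_cluster_name
}
```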

The Power of Terraform

Providers

Info

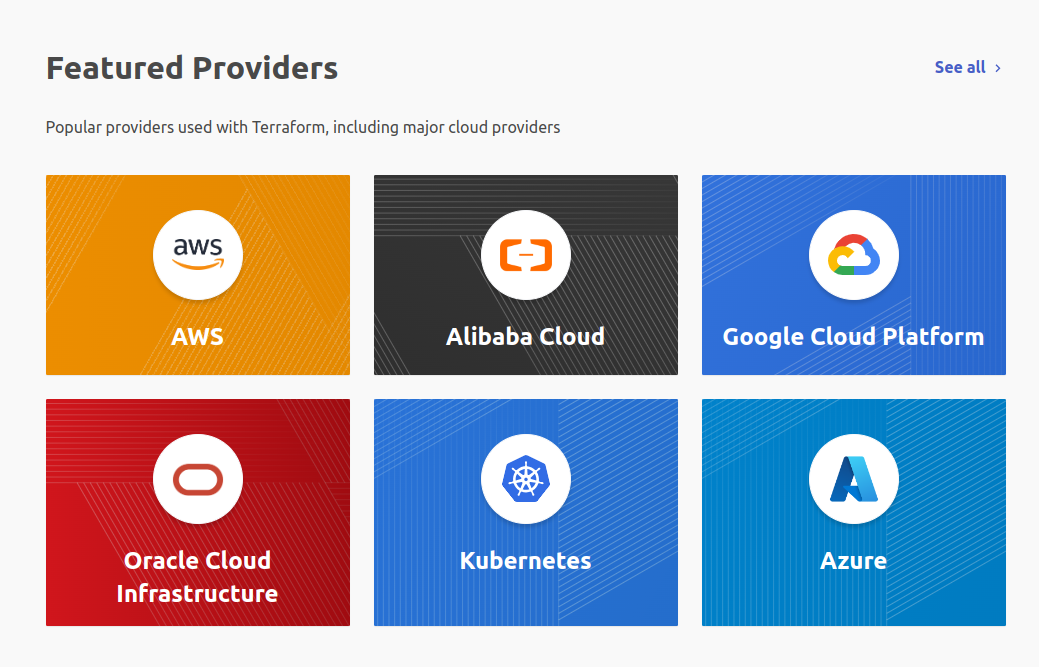

With Terraform, you get a huge community and tons of providers for open-source tools, cloud platforms, and SaaS products. Platforms publish their Terraform providers in the Terraform Registry.
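Providers from the Registry are declared in a `required_providers` block. As a sketch, the block below uses the pessimistic constraint operator `~>` instead of an exact pin; the versions are illustrative, not a recommendation. `~> 3.85` accepts 3.85 and any later 3.x release but not 4.0, while `~> 2.12.1` accepts only 2.12.x patch releases from 2.12.1 onward.

```hcl
terraform {
  # Require Terraform CLI 1.6.0 or newer
  required_version = ">= 1.6.0"

  required_providers {
    # >= 3.85.0 and < 4.0.0
    azurerm = {
      source  = "hashicorp/azurerm"
      version = "~> 3.85"
    }
    # >= 2.12.1 and < 2.13.0
    helm = {
      source  = "hashicorp/helm"
      version = "~> 2.12.1"
    }
  }
}
```

Exact pins such as `=3.85.0` give fully reproducible runs, while `~>` lets `terraform init -upgrade` pick up compatible fixes.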

Documentation

- Supports `terraform-docs`, which generates documentation in multiple formats such as `markdown`, `json`, and `xml`. Try it with the terraform-docs-user-guide.

- Provider documentation is powerful and clear, which makes getting started easy. Check out my `terraform-docs` configuration at Configuration CheatSheet > terraform-docs
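`terraform-docs` builds its Inputs table from the `description`, `type`, and `default` of each `variable` block, so well-described variables produce useful docs for free. A hypothetical module interface as a sketch; the variable names are illustrative.

```hcl
variable "k8s_version" {
  description = "Kubernetes version of the AKS cluster"
  type        = string
  default     = "1.27.7"
}

variable "node_count" {
  description = "Number of nodes in the default node pool"
  type        = number
  default     = 2
}
```

Running `terraform-docs markdown table .` inside the module directory would then render these inputs as a markdown table.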

Integration

- Integrates with multiple platforms and plugins, such as code scanning, CI/CD, observability/monitoring, security, audit, …

- Tons of open-source tools help you work with Terraform, which has helped make it a standard for Infrastructure as Code

Structure Terraform as Code

Related articles

- The Right way to structure Terraform Project!

- Terraform Files – How to Structure a Terraform Project

Pros of structuring your Terraform project

Based on: 10 Essential Aspects of a Terraform Project

- Project Structure: Maintain a standardized format for files and directories across all projects, ensuring consistency in organization.

- Modularization: Utilize modules to enhance code abstraction and increase code reusability.

- Naming Conventions: Implement naming conventions for configuration objects, such as variables, modules, and files, to ensure uniformity in resource names.

- Code Formatting and Style: Employ consistent coding practices to keep your code clean, easy to read, understand, and maintain.

- Variables Declaration and Assignment: Avoid hard-coding values to ensure code reusability and organization, especially for future modifications.

- Module Outputs: Effectively integrate modules and the resources contained within them.

- Data Sources: Leverage data sources to access resources defined outside of the Terraform project.

- Remote State Management: Facilitate collaboration within teams and safeguard the state file from accidental deletion.

- Project Blast Radius: Maintain an optimal project size, grouping related resources together while striking a balance between coupling and cohesion.

- Version Control: Implement code version control to efficiently manage different versions of the code, facilitating collaboration and code sharing within teams.
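A small sketch of the naming-convention and variable points above, using hypothetical variables (`project`, `environment`, `location`) and a `locals` block so that names and tags are built in one place instead of being hard-coded per resource. It assumes the `azurerm` provider is configured elsewhere.

```hcl
variable "project" {
  description = "Short project name used in resource names"
  type        = string
}

variable "environment" {
  description = "Deployment environment, e.g. dev, stage or prod"
  type        = string
}

variable "location" {
  description = "Azure region for the resources"
  type        = string
}

locals {
  # Single naming pattern shared by all resources, e.g. "shop-prod"
  name_prefix = "${var.project}-${var.environment}"

  # Tags applied consistently to every resource
  common_tags = {
    project     = var.project
    environment = var.environment
    managed_by  = "terraform"
  }
}

resource "azurerm_resource_group" "this" {
  name     = "rg-${local.name_prefix}"
  location = var.location
  tags     = local.common_tags
}
```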

Structuring a Terraform Project

In general, we need to manage large systems with multiple environments. Terraform lets us separate the main components into modules and call them from environment-specific configurations. A layout could look like this:

```text
projectname/
|
|-- environment/
|   |
|   |-- prod/
|   |   |
|   |   |-- region1/
|   |   |   |
|   |   |   |-- provider.tf
|   |   |   |-- version.tf
|   |   |   |-- backend.tf
|   |   |   |-- main.tf
|   |   |   |-- variables.tf
|   |   |   |-- terraform.tfvars
|   |   |   |-- outputs.tf
|   |   |
|   |   |-- region2/
|   |   |   |
|   |   |   |-- ...
|   |
|   |-- dev/
|   |   |
|   |   |-- ...
|   |
|   |-- stage/
|   |
|   |-- ...
|
|-- modules/
|   |
|   |-- network/
|   |   |
|   |   |-- provider.tf
|   |   |-- network.tf
|   |   |-- loadbalancer.tf
|   |   |-- variables.tf
|   |   |-- variables-local.tf
|   |   |-- outputs.tf
|   |   |-- README.md
|   |   |-- examples/
|   |   |-- docs/
|   |
|   |-- vm/
|   |
|   |-- ...
|
|-- ...
```

Understanding Terraform structure

Info

Based on the layout above, a Terraform project is split into two parts:

- Main directory: Where we run the Terraform commands; code execution and deployment start here.

- Modules Directory: Modules represent a fundamental aspect of Terraform that empowers the creation of reusable infrastructure deployment code.

Main Directory

The main directory is organized per environment: every environment uses the same structure, only with different parameters and configuration.

- `provider.tf`: Specifies the cloud or SaaS providers utilized.

- `main.tf`: Serves as the central Terraform file from which modules are invoked.

- `variables.tf`: Contains variable declarations.

- `outputs.tf`: Includes output value definitions.

- `terraform.tfvars`: Stores variable definitions and assignments.
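For example, an environment's main directory might declare its variables once in `variables.tf` and set per-environment values in `terraform.tfvars`, which Terraform loads automatically. The sketch shows both files in one listing; the names mirror `cluster_name` and `resource_group_name` from the Kubernetes configuration later in this post, and the values are hypothetical.

```hcl
# --- variables.tf (same file in every environment) ---
variable "cluster_name" {
  description = "Name of the AKS cluster"
  type        = string
}

variable "resource_group_name" {
  description = "Resource group that contains the cluster"
  type        = string
}

variable "k8s_version" {
  description = "Kubernetes version"
  type        = string
}

# --- terraform.tfvars (environment/prod/region1, hypothetical values) ---
cluster_name        = "aks-prod-region1"
resource_group_name = "rg-prod-region1"
k8s_version         = "1.27.7"
```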

Notice

In some cases, `terraform.tfvars` is not used. Instead, the pipeline supplies Terraform variables another way: through environment variables named `TF_VAR_<name>`. Explore more: Terraform - Environment Variables

For example:

```shell
export TF_VAR_region=us-west-1
export TF_VAR_ami=ami-049d8641
export TF_VAR_alist='[1,2,3]'
export TF_VAR_amap='{ foo = "bar", baz = "qux" }'
```

Modules Directory

The modules directory is similar to the main directory, but each module is more specific, for example:

```text
|   |-- network/
|   |   |
|   |   |-- provider.tf
|   |   |-- network.tf
|   |   |-- loadbalancer.tf
|   |   |-- variables.tf
|   |   |-- outputs.tf
```

- `provider.tf`: Specifies the provider employed for resources within the module.

- `network.tf` or `loadbalancer.tf`: Define the resources that belong to the module.

- `variables.tf`: Contains declarations for module-specific variables.

- `outputs.tf`: Comprises definitions for output values.
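A sketch of what the `network` module's files might contain, assuming a single Azure virtual network; the variable names and the default CIDR are hypothetical. The output exposes the network ID so the calling configuration (or other modules) can use it.

```hcl
# --- modules/network/variables.tf ---
variable "vnet_name" {
  description = "Name of the virtual network"
  type        = string
}

variable "location" {
  type = string
}

variable "resource_group_name" {
  type = string
}

variable "address_space" {
  description = "CIDR ranges for the virtual network"
  type        = list(string)
  default     = ["10.0.0.0/16"]
}

# --- modules/network/network.tf ---
resource "azurerm_virtual_network" "this" {
  name                = var.vnet_name
  location            = var.location
  resource_group_name = var.resource_group_name
  address_space       = var.address_space
}

# --- modules/network/outputs.tf ---
output "vnet_id" {
  description = "ID of the virtual network, for use by other modules"
  value       = azurerm_virtual_network.this.id
}
```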

Connect the main directory with the modules directory

A module from the modules directory is called from the main directory like this:

```hcl
module "elasticsearch" {
  source = "../../modules/elasticsearch"

  es_version     = var.elasticsearch_version
  node_count     = var.elasticsearch_node_count
  memory_request = var.elasticsearch_memory_request
  memory_limit   = var.elasticsearch_memory_limit
  cpu_request    = var.elasticsearch_cpu_request
  cpu_limit      = var.elasticsearch_cpu_limit
  storage_size   = var.elasticsearch_storage_size
  remote_state   = data.terraform_remote_state.azure.outputs

  # Create common resources first
  depends_on = [
    module.common_resources
  ]
}
```

This example calls the `elasticsearch` module from the main directory, so when we apply `main.tf`, Elasticsearch is created.

To prevent errors when calling your module, pay attention to the following:

- Set every variable the module uses: each input passed from the main directory (for example, with `var.*`) must be declared in the module's `variables.tf`.

- Set `depends_on` to provision your services in sequence. In some situations, other modules must be created first, and your module relies on them being in place before it is created.
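On the module side, every argument passed in the `module "elasticsearch"` block above needs a matching `variable` declaration; otherwise Terraform reports an "Unsupported argument" error. A sketch of `modules/elasticsearch/variables.tf`, where the types, defaults, and example values are assumptions because the original module is not shown.

```hcl
# modules/elasticsearch/variables.tf (types are assumptions)
variable "es_version" {
  description = "Elasticsearch version to deploy"
  type        = string
}

variable "node_count" {
  description = "Number of Elasticsearch nodes"
  type        = number
  default     = 3
}

variable "memory_request" {
  type = string # e.g. "2Gi"
}

variable "memory_limit" {
  type = string
}

variable "cpu_request" {
  type = string # e.g. "500m"
}

variable "cpu_limit" {
  type = string
}

variable "storage_size" {
  type = string # e.g. "50Gi"
}

# All outputs of the Azure state, passed through as one object
variable "remote_state" {
  type = any
}
```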

Running Terraform to provision your system

Info

In my setup, Terraform manages two parts:

- Azure: Use to create and manage Azure Cloud Service

- K8s: Use to create and manage services in Kubernetes

Azure

Providers: `azurerm` provisions all cloud services, and the `*.tfstate` file is stored as remote state in Azure Blob Storage (Azure Storage Account).

```hcl
# Pin the azurerm provider version
terraform {
  required_providers {
    azurerm = {
      source  = "hashicorp/azurerm"
      version = "=3.85.0"
    }
  }
}

provider "azurerm" {
  features {}
}

# Store *.tfstate in an Azure Storage Account container (values redacted)
terraform {
  backend "azurerm" {
    resource_group_name  = "xxxx"
    storage_account_name = "xxxx"
    container_name       = "xxxx"
    key                  = "xxxx"
    subscription_id      = "xxxx"
  }
}
```

Modules provisioned with azurerm include:

- Resource group

- Vault

- AKS

- MSSQL

- PostgreSQL

- Storage Account, Azure Container, Azure File, …
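For instance, a Storage Account and a private container for Terraform state could be defined with `azurerm` like this. A sketch with hypothetical variable names; note that the account holding a configuration's own state usually has to be created separately first (for example, by a small bootstrap configuration), because the backend must exist before `terraform init`.

```hcl
variable "resource_group_name" { type = string }
variable "location" { type = string }

variable "storage_account_name" {
  # Must be 3-24 lowercase letters and digits, globally unique
  type = string
}

resource "azurerm_resource_group" "state" {
  name     = var.resource_group_name
  location = var.location
}

resource "azurerm_storage_account" "state" {
  name                     = var.storage_account_name
  resource_group_name      = azurerm_resource_group.state.name
  location                 = azurerm_resource_group.state.location
  account_tier             = "Standard"
  account_replication_type = "LRS"
}

# Private container that holds the *.tfstate blobs
resource "azurerm_storage_container" "state" {
  name                  = "tfstate"
  storage_account_name  = azurerm_storage_account.state.name
  container_access_type = "private"
}
```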

Kubernetes

Providers: For Kubernetes, we use multiple providers to work with the cluster:

- `azurerm`: Gets the credentials of the cluster (important!)

- `helm`: Uses `helm_release` to release Helm charts into K8s

- `kubectl`: Applies workload, network, and storage manifests to K8s

- `grafana`: Creates alerts for the K8s system

```hcl
terraform {
  required_providers {
    azurerm = {
      source  = "hashicorp/azurerm"
      version = "=3.85.0"
    }
    # helm and kubernetes are separate providers, so each needs its own entry
    helm = {
      source  = "hashicorp/helm"
      version = "2.12.1"
    }
    kubernetes = {
      source  = "hashicorp/kubernetes"
      version = ">= 2.10.0"
    }
    kubectl = {
      source  = "gavinbunney/kubectl"
      version = "=1.14.0"
    }
    grafana = {
      source  = "grafana/grafana"
      version = "=2.9.0"
    }
  }
}

provider "azurerm" {
  features {}
}

terraform {
  backend "azurerm" {
    resource_group_name  = "xxxx"
    storage_account_name = "xxxx"
    container_name       = "xxxx"
    key                  = "xxxx"
    subscription_id      = "xxxx"
  }
}

# Read the existing AKS cluster to obtain its credentials
data "azurerm_kubernetes_cluster" "k8s" {
  name                = var.cluster_name
  resource_group_name = var.resource_group_name
}

provider "kubernetes" {
  host                   = data.azurerm_kubernetes_cluster.k8s.kube_config.0.host
  client_certificate     = base64decode(data.azurerm_kubernetes_cluster.k8s.kube_config.0.client_certificate)
  client_key             = base64decode(data.azurerm_kubernetes_cluster.k8s.kube_config.0.client_key)
  cluster_ca_certificate = base64decode(data.azurerm_kubernetes_cluster.k8s.kube_config.0.cluster_ca_certificate)
}

provider "helm" {
  kubernetes {
    host                   = data.azurerm_kubernetes_cluster.k8s.kube_config.0.host
    client_certificate     = base64decode(data.azurerm_kubernetes_cluster.k8s.kube_config.0.client_certificate)
    client_key             = base64decode(data.azurerm_kubernetes_cluster.k8s.kube_config.0.client_key)
    cluster_ca_certificate = base64decode(data.azurerm_kubernetes_cluster.k8s.kube_config.0.cluster_ca_certificate)
  }
}

provider "kubectl" {
  host                   = data.azurerm_kubernetes_cluster.k8s.kube_config.0.host
  client_certificate     = base64decode(data.azurerm_kubernetes_cluster.k8s.kube_config.0.client_certificate)
  client_key             = base64decode(data.azurerm_kubernetes_cluster.k8s.kube_config.0.client_key)
  cluster_ca_certificate = base64decode(data.azurerm_kubernetes_cluster.k8s.kube_config.0.cluster_ca_certificate)
  # Use the credentials above instead of a local kubeconfig
  load_config_file = false
}

provider "grafana" {
  url  = "https://monitoring.example.xyz"
  auth = var.grafana_auth
}
```
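With the `kubectl` provider configured as above, a manifest such as a StorageClass can be applied through `kubectl_manifest`. A sketch in which the class name and parameters are hypothetical; `disk.csi.azure.com` is the Azure Disk CSI driver used by AKS.

```hcl
resource "kubectl_manifest" "storage_class_retain" {
  # Indented heredoc (<<-) strips the common leading whitespace
  yaml_body = <<-YAML
    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: managed-premium-retain
    provisioner: disk.csi.azure.com
    parameters:
      skuName: Premium_LRS
    reclaimPolicy: Retain
    volumeBindingMode: WaitForFirstConsumer
    allowVolumeExpansion: true
  YAML
}
```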

Modules provisioned on Kubernetes include:

- WordPress Website: MySQL and WordPress

- Common resources: PVC, Secrets, Storage Class

- Elasticsearch

- Ingress Nginx

- Prometheus + Grafana

- Loki + Promtail

- MongoDB

- RabbitMQ

- Tempo

- Redis
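As a sketch of how one of these, Ingress Nginx, could be released with the `helm` provider's `helm_release` resource. The chart version pin and the replica override are example values to adjust, not the author's settings.

```hcl
resource "helm_release" "ingress_nginx" {
  name             = "ingress-nginx"
  repository       = "https://kubernetes.github.io/ingress-nginx"
  chart            = "ingress-nginx"
  version          = "4.9.1" # example pin; choose the chart version you need
  namespace        = "ingress-nginx"
  create_namespace = true

  # Override a single chart value, like --set on the Helm CLI
  set {
    name  = "controller.replicaCount"
    value = "2"
  }
}
```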

Running Terraform from the terminal

To run the provisioning process, follow these steps:

- Create and validate the module variables in the main `variables.tf` file, for example by setting defaults or reading them from environment variables.

- Change to the main directory of the target environment and run `terraform init` to prepare the working directory; `xxxx.tfstate` is then created in the remote state.

- Run `terraform plan` to review which resources will be created or changed before applying.

- After checking which resources will be created, destroyed, or changed, run `terraform apply` to apply the new infrastructure.
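For the first step, a `validation` block lets Terraform reject bad input before it plans anything. A sketch with a hypothetical `environment` variable; its value can come from the default, `terraform.tfvars`, or `TF_VAR_environment`.

```hcl
variable "environment" {
  description = "Target environment"
  type        = string
  default     = "dev"

  # Fail early if the value is not one of the known environments
  validation {
    condition     = contains(["dev", "uat", "prod"], var.environment)
    error_message = "The environment must be one of: dev, uat, prod."
  }
}
```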

Running Terraform with Azure Pipelines (Automation)


There are many ways to automate your Terraform workflow and integrate Terraform into CI/CD tools such as Azure Pipelines, GitHub Actions, or GitLab CI, and they are genuinely useful. Here is an Azure Pipelines example:

```yaml
name: $(BuildDefinitionName)_$(date:yyyyMMdd)$(rev:.r)

trigger: none

pool: $(PoolName)

stages:
  - stage: terraform_plan
    jobs:
      - job: "terraform_plan"
        steps:
          - task: TerraformInstaller@0
            displayName: install terraform
            inputs:
              terraformVersion: '1.6.6'

          - task: ms-devlabs.custom-terraform-tasks.custom-terraform-release-task.TerraformTaskV3@3
            displayName: 'Terraform : init'
            inputs:
              workingDirectory: $(WorkingDirectory)
              backendServiceArm: $(ServiceConnectionName)
              backendAzureRmResourceGroupName: $(BackendAzureRmResourceGroupName)
              backendAzureRmStorageAccountName: $(BackendAzureRmStorageAccountName)
              backendAzureRmContainerName: $(BackendAzureRmContainerName)
              backendAzureRmKey: $(BackendAzureRmKey)

          - task: ms-devlabs.custom-terraform-tasks.custom-terraform-release-task.TerraformTaskV3@3
            displayName: 'Terraform : plan'
            inputs:
              command: plan
              workingDirectory: $(WorkingDirectory)
              commandOptions: '-out=plan.tfplan -input=false'
              environmentServiceNameAzureRM: $(ServiceConnectionName)

          - task: PublishBuildArtifacts@1
            displayName: 'Publish Artifact: plan'
            inputs:
              PathtoPublish: $(BaseDirectory)
              ArtifactName: plan

          - task: DeleteFiles@1
            displayName: 'Remove unneeded files'
            inputs:
              contents: |
                .terraform
                plan.tfplan

  - stage: terraform_apply
    dependsOn: [terraform_plan]
    condition: succeeded('terraform_plan')
    jobs:
      - deployment: terraform_apply
        pool: $(PoolName)
        # creates an environment if it doesn't exist
        environment: $(EnvironmentName)
        strategy:
          runOnce:
            deploy:
              steps:
                - checkout: none

                - task: TerraformInstaller@0
                  displayName: install terraform
                  inputs:
                    terraformVersion: '1.6.6'

                - task: ms-devlabs.custom-terraform-tasks.custom-terraform-release-task.TerraformTaskV3@3
                  displayName: 'Terraform : init'
                  inputs:
                    workingDirectory: $(WorkingDirectory)
                    backendServiceArm: $(ServiceConnectionName)
                    backendAzureRmResourceGroupName: $(BackendAzureRmResourceGroupName)
                    backendAzureRmStorageAccountName: $(BackendAzureRmStorageAccountName)
                    backendAzureRmContainerName: $(BackendAzureRmContainerName)
                    backendAzureRmKey: $(BackendAzureRmKey)

                - task: ms-devlabs.custom-terraform-tasks.custom-terraform-release-task.TerraformTaskV3@3
                  displayName: 'Terraform : apply'
                  inputs:
                    command: apply
                    workingDirectory: $(WorkingDirectory)
                    commandOptions: 'plan.tfplan'
                    environmentServiceNameAzureRM: $(ServiceConnectionName)
```

To run the Terraform tasks, install the extension for your Azure Pipelines organization by following the documentation at Terraform Task - Microsoft DevLabs

The pipeline is split into two stages:

- `terraform_plan`: Runs `init` and `plan`, which show the changes to your infrastructure.

- `terraform_apply`: Runs only if `terraform_plan` succeeded, and you must grant approval before the new changes are applied to the infrastructure.
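Because the init task receives the backend settings as pipeline inputs, one option is to keep them out of the repository entirely with Terraform's partial backend configuration. A sketch, assuming the task forwards those inputs to `terraform init` as backend settings; for a local run, the same values can live in a file passed with `terraform init -backend-config=<file>`. The file name and values below are hypothetical.

```hcl
# --- backend.tf: environment-specific values left out (partial configuration) ---
terraform {
  backend "azurerm" {}
}

# --- prod.azurerm.tfbackend: hypothetical file for local runs ---
# Usage: terraform init -backend-config=prod.azurerm.tfbackend
resource_group_name  = "xxxx"
storage_account_name = "xxxx"
container_name       = "xxxx"
key                  = "xxxx"
```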

Things to note when running Terraform in a pipeline:

- Provide Terraform's environment variables through pipeline variables

- Grant the required permissions on the pipeline environment

- Select and run the pipeline for the target environment, such as dev, uat, or prod
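For secrets such as the `grafana_auth` value used by the Grafana provider above, one option is a secret pipeline variable exposed to Terraform as `TF_VAR_grafana_auth`, paired with a `sensitive` declaration so the value is redacted in plan output. A sketch; keep in mind that Azure Pipelines does not expose secret variables as environment variables automatically, so they must be mapped explicitly in the step's `env`.

```hcl
variable "grafana_auth" {
  description = "Grafana API key or user:password, supplied via TF_VAR_grafana_auth"
  type        = string
  sensitive   = true # redacted in plan and apply output
}
```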

Example: Update the AKS version with Terraform

Note

You should update your AKS version with Terraform so that upgrades in the cloud stay safe and consistent. If you run AKS or anything else on Azure Cloud, handling version upgrades with Terraform is genuinely useful.

- Check which versions your current cluster can be upgraded to with `az-cli`:

```shell
az aks get-upgrades --resource-group <rg-name> --name <cluster-name> --output table
```

```text
Name     ResourceGroup    MasterVersion    Upgrades
-------  ---------------  ---------------  ----------------------
default  aks              1.27.7           1.27.9, 1.28.3, 1.28.5
```

- After you decide on the upgrade version, go to `./deploy/terraform/azure/env/<name-env>/variables.tf`, find `k8s_version`, and change it to the target version:

```hcl
variable "k8s_version" {
  description = "Kubernetes version"
  default     = "1.28.5" # changed from "1.27.7"
}
```

- In the first (plan) stage, make sure nothing else is affected by your change: the plan should show only 1 change, the `aks` version. If nothing unexpected shows up, approve the next stage.

- Validate your cluster version once the upgrade completes (it takes about 10-20 minutes).
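For the version change to reach the cluster, `k8s_version` has to feed the AKS resource. A sketch of that wiring with `azurerm` 3.x, where the cluster, node pool, and VM size are hypothetical: `kubernetes_version` sets the control-plane version and `orchestrator_version` sets the default node pool's version, and both are updated in place, which is why the plan shows a single change.

```hcl
variable "k8s_version" {
  description = "Kubernetes version"
  type        = string
  default     = "1.28.5"
}

variable "cluster_name" { type = string }
variable "resource_group_name" { type = string }
variable "location" { type = string }

resource "azurerm_kubernetes_cluster" "this" {
  name                = var.cluster_name
  location            = var.location
  resource_group_name = var.resource_group_name
  dns_prefix          = var.cluster_name

  # Control-plane version
  kubernetes_version = var.k8s_version

  default_node_pool {
    name       = "default"
    vm_size    = "Standard_D2s_v3"
    node_count = 2

    # Node pool version, kept in step with the control plane
    orchestrator_version = var.k8s_version
  }

  identity {
    type = "SystemAssigned"
  }
}
```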

Conclusion

Success

In this post, we covered IaC and Terraform:

- Install and set up Terraform for your environment

- What does Terraform do for your infrastructure?

- The power of Terraform

- Structure of Terraform

- How to provision with Terraform on your machine or in a pipeline

- Update your AKS version with Terraform