Using volumes with CronJobs

Purpose

This note collects things I found while working with K8s: short notes and links for resolving problems. If a topic has its own page, find the details there.

CronJob --> creates Jobs (triggered on schedule) --> Pod: in this chain, the Pod could use the volume, and the mount was ready when the script ran. When I applied the same spec as a standalone Pod, it did not work: the command seemed to run before the mount finished. Check it in this link. (The kubelet normally mounts all of a Pod's volumes before starting its containers, so if a standalone Pod cannot see its files, check the mountPath and whether the PVC is bound first.)

apiVersion: batch/v1
kind: CronJob
metadata:
  name: update-db
spec:
  schedule: "*/1 * * * *"
  jobTemplate:
    spec:
      template:
        spec:
          containers:
          - name: update-fingerprints
            image: python:3.6.2-slim
            command: ["/bin/bash"]
            args: ["-c", "python /client/test.py"]
            volumeMounts:
            - name: application-code
              mountPath: /client # the script /client/test.py lives on this volume
          restartPolicy: OnFailure
          volumes:
          - name: application-code
            persistentVolumeClaim:
              claimName: application-code-pv-claim

Do Kubernetes Pods Really Get Evicted Due to CPU Pressure?

Reference article: Do Kubernetes Pods Really Get Evicted Due to CPU Pressure?

Tip

Pods are not directly evicted due to high CPU pressure or usage alone. Instead, Kubernetes relies on CPU throttling mechanisms to manage and limit a pod’s CPU usage, ensuring fair resource sharing among pods on the same node.

While high CPU usage by a pod can indirectly contribute to resource pressure and potentially lead to eviction due to memory or other resource shortages, CPU throttling is the primary mechanism used to manage CPU-intensive workloads.
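To put throttling into numbers: the kubelet turns a container's CPU limit into a CFS quota over a period of 100 ms by default. Once the container has used its quota in a period, it is throttled until the next period starts; nothing gets evicted. A minimal sketch of that arithmetic, assuming the default period and a limit given in millicores (the function name is made up):

```shell
#!/bin/sh
# Sketch: how a CPU limit in millicores maps to a CFS quota.
# Assumes the default CFS period of 100 ms (100000 microseconds).
CFS_PERIOD_US=100000

# cfs_quota_us <millicores>: CPU time, in microseconds, the container may use
# in each period before it is throttled until the next period starts.
cfs_quota_us() {
  echo $(( $1 * CFS_PERIOD_US / 1000 ))
}

echo "limit=500m  -> quota=$(cfs_quota_us 500)us per ${CFS_PERIOD_US}us"
echo "limit=1500m -> quota=$(cfs_quota_us 1500)us per ${CFS_PERIOD_US}us"
```

On cgroup v2 nodes the same pair shows up in the container's cpu.max file (for a 500m limit, "50000 100000"), and cpu.stat counts how often the container was throttled (nr_throttled).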

# Restart Statefulset workload

Related link

Notice

- Do not delete the StatefulSet workload itself: its pods are terminated with it and nothing brings them back. Delete only the pods instead; the StatefulSet controller recreates them according to the StatefulSet strategy.

- Rolling out a StatefulSet does not work when its status is completed.

- Deleting pods in a StatefulSet does not remove the associated volumes.
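The first point can be scripted. A minimal sketch, assuming the pods follow the standard <statefulset>-<ordinal> naming: it deletes one pod at a time from the highest ordinal down (the same order the controller uses for rolling updates) and waits for each replacement to be Ready before moving on. The function names and the KUBECTL override are illustrative, not part of kubectl:

```shell
#!/bin/sh
# Sketch: restart a StatefulSet's pods one at a time, highest ordinal first,
# instead of deleting the StatefulSet itself.
# Assumes standard <statefulset>-<ordinal> pod names; set KUBECTL=echo for a dry run.
KUBECTL="${KUBECTL:-kubectl}"

# sts_pods_reverse <statefulset> <replicas>: print pod names from N-1 down to 0.
sts_pods_reverse() {
  i=$(( $2 - 1 ))
  while [ "$i" -ge 0 ]; do
    echo "$1-$i"
    i=$(( i - 1 ))
  done
}

# restart_sts_pods <namespace> <statefulset> <replicas>
restart_sts_pods() {
  for pod in $(sts_pods_reverse "$2" "$3"); do
    # kubectl delete waits until the old pod object is gone (default --wait=true)
    "$KUBECTL" --namespace "$1" delete pod "$pod" || return 1
    # The controller recreates the pod under the same name; poll until it exists
    tries=0
    until "$KUBECTL" --namespace "$1" get pod "$pod" >/dev/null 2>&1; do
      tries=$(( tries + 1 ))
      [ "$tries" -ge 60 ] && return 1
      sleep 2
    done
    # ...then wait for it to become Ready before touching the next one
    "$KUBECTL" --namespace "$1" wait --for=condition=Ready "pod/$pod" --timeout=300s || return 1
  done
}
```

For example, restart_sts_pods my-namespace web 3 restarts web-2, then web-1, then web-0.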

Note

Deleting the PVC after the pods have terminated might trigger deletion of the backing Persistent Volumes depending on the storage class and reclaim policy. You should never assume ability to access a volume after claim deletion.

Note: Use caution when deleting a PVC, as it may lead to data loss.
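Since deleting pods leaves the volumes in place, it helps to know which PVCs belong to a StatefulSet before removing any of them. Kubernetes names a PVC created from a volumeClaimTemplate <template>-<statefulset>-<ordinal>. This sketch only prints those names (data and web below are hypothetical), so you can compare them with the output of kubectl get pvc:

```shell
#!/bin/sh
# Sketch: list the PVC names a StatefulSet creates from one volumeClaimTemplate.
# Kubernetes names them <template>-<statefulset>-<ordinal>.
# sts_pvc_names <template> <statefulset> <replicas>
sts_pvc_names() {
  i=0
  while [ "$i" -lt "$3" ]; do
    echo "$1-$2-$i"
    i=$(( i + 1 ))
  done
}

# Hypothetical example: template "data", StatefulSet "web", 3 replicas
sts_pvc_names data web 3
```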

- Complete deletion of a StatefulSet

To delete everything in a StatefulSet, including the associated pods, you can run a series of commands similar to the following:

grace=$(kubectl get pods <stateful-set-pod> --template '{{.spec.terminationGracePeriodSeconds}}')

kubectl delete statefulset -l app.kubernetes.io/name=MyApp

sleep $grace

kubectl delete pvc -l app.kubernetes.io/name=MyApp

Create troubleshooting pods

You can create standalone pods, not managed by any Deployment, for purposes such as:

- Checking networking on a node or in the cluster, such as DNS resolution and health checks

- Backing up and restoring a database

- Debugging or accessing internal services
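For the networking checks, once you have a shell in a debug pod (created with the steps below), a small function like this shows whether names resolve. It is a sketch that assumes getent is available, which it is in Debian images such as debian:11.7; check_dns is a made-up name:

```shell
#!/bin/sh
# Sketch: check that names resolve from inside a debug pod.
# Assumes getent is available (Debian images such as debian:11.7 ship it).
# check_dns <name>...: print OK/FAIL per name, return non-zero if any failed.
check_dns() {
  failed=0
  for host in "$@"; do
    if addr=$(getent hosts "$host"); then
      echo "OK   $host -> $(echo "$addr" | awk 'NR == 1 { print $1 }')"
    else
      echo "FAIL $host"
      failed=1
    fi
  done
  return "$failed"
}
```

Inside the pod you would then run, for example, check_dns kubernetes.default.svc.cluster.local, assuming the cluster uses the default cluster.local domain.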

To do that, use kubectl (k below is a common shell alias for kubectl):

- Use kubectl to generate a pod manifest

k run <name-pod> --image=debian:11.7 --dry-run=client -o yaml > pods.yaml

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: <name-pod>
  name: <name-pod>
spec:
  containers:
  - image: debian:11.7
    name: <name-pod>
    resources: {}
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}

- Customize the pod. To keep it alive, set the container's command to tail -f /dev/null (for example command: ["tail", "-f", "/dev/null"] in the container entry); otherwise the Debian container exits right away.

- Run the apply command with the manifest

k apply -f pods.yaml

- Wait a few seconds, then exec into the pod:

k exec --tty --stdin pods/xxxx -- /bin/bash

- Once you've finished testing, press Ctrl+D to leave the shell session in the pod. The pod keeps running afterwards, so you can exec into it again (previous step) or delete it:

kubectl delete pod xxxx

NOTE: curlimages/curl is commonly used for this. To create a pod as fast as possible:

kubectl run mycurlpod --image=curlimages/curl -i --tty -- sh

Stop or run the CronJob with patch

A CronJob is a scheduled Kubernetes workload: at the set time, it creates a Job to run the specified task. Sometimes, for example during working hours, a test job should not run, so you need to suspend it. You can update the suspend state with:

k patch -n <namespace> cronjobs.batch <cronjobs-name> -p '{"spec": {"suspend": true}}'

Enable it again by changing true ⇒ false:

k patch -n <namespace> cronjobs.batch <cronjobs-name> -p '{"spec": {"suspend": false}}'

Furthermore, you can use patch for many other purposes, for example:

- Update a container’s image

- Partially update a node

- Disable a deployment livenessProbe using json patch

- Update a deployment’s replica count
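The two suspend commands can be wrapped in a small helper, together with kubectl create job --from=cronjob/..., which starts a one-off run from the CronJob's template. A sketch; the function names are hypothetical, and KUBECTL can be set to echo to print the commands instead of running them:

```shell
#!/bin/sh
# Sketch: helpers for the CronJob suspend patch and for a manual run.
# Function names are hypothetical; set KUBECTL=echo to print commands instead.
KUBECTL="${KUBECTL:-kubectl}"

# cronjob_suspend <namespace> <cronjob> <true|false>
cronjob_suspend() {
  case "$3" in
    true|false) ;;
    *) echo "suspend must be true or false, got '$3'" >&2; return 2 ;;
  esac
  "$KUBECTL" --namespace "$1" patch cronjobs.batch "$2" -p "{\"spec\":{\"suspend\":$3}}"
}

# cronjob_run_now <namespace> <cronjob> <job-name>
# Creates a one-off Job from the CronJob's jobTemplate, even while it is suspended.
cronjob_run_now() {
  "$KUBECTL" --namespace "$1" create job "$3" --from="cronjob/$2"
}
```

A typical flow: cronjob_suspend my-ns my-cronjob true during working hours, cronjob_run_now my-ns my-cronjob my-cronjob-manual-1 to test it once, then cronjob_suspend my-ns my-cronjob false. The argument check catches typos such as "ture" before they reach the API server.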

Updating resources

You can handle graceful restarts and version rollbacks with the rollout command:

# Graceful restart of deployments, statefulsets and daemonsets

k rollout restart -n <namespace> <type-workload>/<name>

# Rollback version

kubectl rollout undo <type-workload>/<name>

kubectl rollout undo <type-workload>/<name> --to-revision=2

# Check the rollout status

kubectl rollout status -w <type-workload>/<name>

Kubernetes has two kinds of key/value metadata that help distinguish objects from each other: labels specify identifying attributes of objects (selectors match on them), while annotations attach arbitrary non-identifying metadata to objects.

- Labels

- Annotations

You can update them with the kubectl label and annotate commands:

# Add a Label

kubectl label pods my-pod new-label=awesome

# Remove a label

kubectl label pods my-pod new-label-

# Overwrite an existing value

kubectl label pods my-pod new-label=new-value --overwrite

# Add an annotation

kubectl annotate pods my-pod icon-url=http://goo.gl/XXBTWq

# Remove annotation

kubectl annotate pods my-pod icon-url-

Next, you can set up autoscaling for a deployment with the autoscale command:

kubectl autoscale deployment foo --min=2 --max=10

Edit YAML manifest

kubectl can open a resource's manifest in an editor directly from your shell. On Linux or macOS, you can use nano or vim for this:

# Edit the service named docker-registry

kubectl edit svc/docker-registry

# Use an alternative editor

KUBE_EDITOR="nano" kubectl edit svc/docker-registry

When you save and close the editor, the change is applied to the resource immediately.

Delete resource

Use the delete command:

# Delete a pod using the type and name specified in pod.json

kubectl delete -f ./pod.json

# Delete a pod with no grace period

kubectl delete pod unwanted --now

kubectl delete pods <pod> --grace-period=0

# Delete pods and services with same names "baz" and "foo"

kubectl delete pod,service baz foo

Health check and interact with cluster, node and workload

Use events to see what is happening in the cluster and on its nodes:

# List Events sorted by timestamp

kubectl get events --sort-by=.metadata.creationTimestamp

# List all warning events

kubectl events --types=Warning

If a workload is not available or not running, use describe for a verbose view of it:

# Describe commands with verbose output

kubectl describe nodes my-node

kubectl describe pods my-pod

When describe does not reveal the problem, check the logs for more information:

# dump pod logs (stdout)

kubectl logs my-pod

# dump pod logs (stdout) for a previous instantiation of a container. Usually used for CrashLoopBackOff

kubectl logs my-pod --previous

# dump pod container logs (stdout, multi-container case) for a previous instantiation of a container

kubectl logs my-pod -c my-container --previous

# stream pod logs (stdout)

kubectl logs -f my-pod

If checking the workload (pods, containers) gives no results, look at resource usage in the cluster instead. Before doing that, make sure the required tools are installed: kubectl top needs metrics-server, and resource-capacity is a kubectl plugin.

# Show metrics for all nodes

kubectl top node

# Show metrics for a given node

kubectl top node my-node

# For a total overview, use the resource-capacity plugin

# print the available quantity instead of the percentage used

kubectl resource-capacity -a

# print resource utilization and pods in the output

kubectl resource-capacity --util -p

kubectl can also mark a node unschedulable and prepare it for maintenance:

# Mark my-node as unschedulable

kubectl cordon my-node

# Drain my-node in preparation for maintenance

kubectl drain my-node

# Mark my-node as schedulable

kubectl uncordon my-node

Tips

There is a lot more you can do with kubectl. To read about a command and its options, use the --help flag.

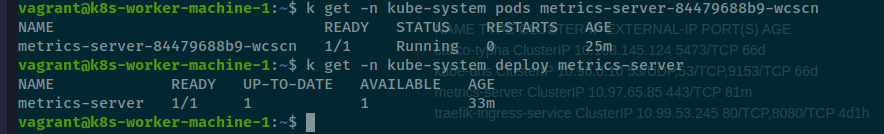
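The drain and uncordon commands from the node section are usually run as a pair around maintenance. A minimal sketch with commonly used drain flags (a choice, not a requirement; check them against your workloads). The function names are hypothetical, and KUBECTL=echo prints the commands instead of running them:

```shell
#!/bin/sh
# Sketch: wrap the node maintenance commands; set KUBECTL=echo for a dry run.
KUBECTL="${KUBECTL:-kubectl}"

# node_maintenance_start <node>: drain cordons the node first, then evicts its pods.
node_maintenance_start() {
  # --ignore-daemonsets: DaemonSet pods cannot be rescheduled elsewhere, so skip them.
  # --delete-emptydir-data: also evict pods using emptyDir volumes (that data is lost).
  # --timeout: give up instead of waiting forever, e.g. on a blocking PodDisruptionBudget.
  "$KUBECTL" drain "$1" --ignore-daemonsets --delete-emptydir-data --timeout=10m
}

# node_maintenance_end <node>: make the node schedulable again.
node_maintenance_end() {
  "$KUBECTL" uncordon "$1"
}
```

For example: node_maintenance_start my-node, do the maintenance, then node_maintenance_end my-node.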

Setup metrics-server

metrics-server is often missing when you self-host Kubernetes, so you need to learn how to set it up, which is quite easy. Read more about metrics-server at metrics-server

With kubectl, you can apply the manifest:

kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

Or you can install the metrics-server chart with helm:

# Add the chart repository to Helm

helm repo add metrics-server https://kubernetes-sigs.github.io/metrics-server/

# Install (or upgrade) the metrics-server release from that repository

helm upgrade --install metrics-server metrics-server/metrics-server

Warning

Your metrics-server may get stuck, because it cannot verify the TLS certificates served by the kubelets.

But don't worry about it, you can bypass this with a workaround. Read more about the solution at

The solution is to use kubectl edit on the metrics-server deployment, like this:

# Optional: use nano as the editor (skip this step if you prefer vim)

export KUBE_EDITOR="nano"

# Edit the deployment manifest

kubectl edit deployments -n kube-system metrics-server

Now scroll to the args of the metrics-server container and change them to:

- args:
  - --cert-dir=/tmp
  - --secure-port=10250
  - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
  - --kubelet-use-node-status-port
  - --metric-resolution=15s
  - --kubelet-insecure-tls=true # Skips verification of the kubelets' serving certificates

metrics-server then restarts and should be running after about 30 seconds.

To learn more about Kubernetes metrics, read the article Kubernetes’ Native Metrics and States
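If you prefer not to open an editor, the same flag can be appended with a JSON patch, which also works in scripts. A sketch, assuming metrics-server is the first container (index 0) of the kube-system/metrics-server Deployment, as in the upstream components.yaml. Like the edit above, it disables kubelet certificate verification, so keep it to test clusters. Running it twice appends the flag twice:

```shell
#!/bin/sh
# Sketch: add --kubelet-insecure-tls to metrics-server non-interactively.
# Assumes metrics-server is container index 0 in the Deployment (as in the
# upstream components.yaml). Set KUBECTL=echo to print the commands instead.
KUBECTL="${KUBECTL:-kubectl}"

# JSON patch "add" with a path ending in /- appends to the args array
PATCH='[{"op":"add","path":"/spec/template/spec/containers/0/args/-","value":"--kubelet-insecure-tls"}]'

metrics_server_insecure_tls() {
  "$KUBECTL" --namespace kube-system patch deployment metrics-server --type=json -p "$PATCH" || return 1
  # Wait for the patched pods to roll out
  "$KUBECTL" --namespace kube-system rollout status deployment/metrics-server --timeout=120s
}
```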

Configure Liveness, Readiness and Startup Probes

Kubernetes implements several probe types to health-check your applications. See more at Liveness, Readiness and Startup Probes

To learn how to configure them, use this documentation

Tip

Probes have a number of fields that you can use to more precisely control the behavior of startup, liveness and readiness checks.

Liveness

Info

Liveness probes determine when to restart a container. For example, liveness probes could catch a deadlock, when an application is running, but unable to make progress.

If a container fails its liveness probe repeatedly, the kubelet restarts the container.

You can set up a liveness probe that runs a command:

apiVersion: v1
kind: Pod
metadata:
  labels:
    test: liveness
  name: liveness-exec
spec:
  containers:
  - name: liveness
    image: registry.k8s.io/busybox
    args:
    - /bin/sh
    - -c
    - touch /tmp/healthy; sleep 30; rm -f /tmp/healthy; sleep 600
    livenessProbe:
      exec:
        command:
        - cat
        - /tmp/healthy
      initialDelaySeconds: 5
      periodSeconds: 5

Or you can use a liveness probe with an HTTP request:

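spec:
  containers:
  - name: liveness
    # image and other container fields omitted
    livenessProbe:
      httpGet:
        path: /healthz
        port: 8080
        httpHeaders:
        - name: Custom-Header
          value: Awesome
      initialDelaySeconds: 3
      periodSeconds: 3

You can also use other protocols with liveness probes, such as TCP (tcpSocket) and gRPC (grpc).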
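The liveness examples above rely on the default failureThreshold of 3: the kubelet restarts the container only after that many consecutive failed checks, and any success resets the count. The loop below is a local imitation of that rule, not kubelet code; probe_loop is a made-up name and the command to probe is passed as arguments:

```shell
#!/bin/sh
# Sketch: a local imitation (NOT kubelet code) of how failureThreshold turns
# probe results into a restart decision: only N consecutive failures count.
# probe_loop <periodSeconds> <failureThreshold> <maxChecks> <command...>
probe_loop() {
  period="$1"; threshold="$2"; max="$3"; shift 3
  failures=0
  check=0
  while [ "$check" -lt "$max" ]; do
    check=$(( check + 1 ))
    if "$@" >/dev/null 2>&1; then
      failures=0                      # a success resets the counter
    else
      failures=$(( failures + 1 ))
      if [ "$failures" -ge "$threshold" ]; then
        echo "restart after check $check"
        return 1
      fi
    fi
    sleep "$period"
  done
  echo "healthy after $max checks"
}
```

To mimic the exec example locally, start (touch /tmp/healthy; sleep 30; rm -f /tmp/healthy) & and then run probe_loop 5 3 20 cat /tmp/healthy: it reports a restart shortly after the file disappears.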

Readiness

Info

Readiness probes determine when a container is ready to start accepting traffic. This is useful when waiting for an application to perform time-consuming initial tasks, such as establishing network connections, loading files, and warming caches.

If the readiness probe returns a failed state, Kubernetes removes the pod from all matching service endpoints.

You can configure a readiness probe like this:

readinessProbe:
  exec:
    command:
    - cat
    - /tmp/healthy
  initialDelaySeconds: 5
  periodSeconds: 5

Configuration for HTTP and TCP readiness probes is also identical to liveness probes.

Info

Readiness and liveness probes can be used in parallel for the same container. Using both can ensure that traffic does not reach a container that is not ready for it, and that containers are restarted when they fail.

Note

Readiness probes run on the container during its whole lifecycle.
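For httpGet probes, readiness and liveness alike, the kubelet counts any status code from 200 up to, but not including, 400 as a success. The sketch below applies that rule to a response fetched with curl; http_probe_ok and probe_url are illustrative names, not part of any tool:

```shell
#!/bin/sh
# Sketch: the success rule for httpGet probes (status >= 200 and < 400),
# applied to a response fetched with curl. Names are illustrative.
# http_probe_ok <status-code>: succeed if the kubelet would count it as a pass.
http_probe_ok() {
  [ "$1" -ge 200 ] 2>/dev/null && [ "$1" -lt 400 ]
}

# probe_url <url>: fetch the URL once (1 s timeout) and report pass/fail.
# curl prints 000 as the status when it cannot connect at all.
probe_url() {
  code=$(curl -s -o /dev/null -w '%{http_code}' --max-time 1 "$1")
  if http_probe_ok "$code"; then
    echo "pass ($code)"
  else
    echo "fail ($code)"
  fi
}
```

From inside the container you could run, for example, probe_url http://localhost:8080/healthz (a placeholder URL) to see what the probe would see.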

Startup

Info

A startup probe verifies whether the application within a container is started. This can be used to adopt liveness checks on slow starting containers, avoiding them getting killed by the kubelet before they are up and running.

If such a probe is configured, it disables liveness and readiness checks until it succeeds.

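You can configure it for your pod like this, next to a liveness probe on the same endpoint:

livenessProbe:
  httpGet:
    path: /healthz
    port: liveness-port # a named containerPort
  failureThreshold: 1
  periodSeconds: 10
startupProbe:
  httpGet:
    path: /healthz
    port: liveness-port
  failureThreshold: 30
  periodSeconds: 10

Startup probes mainly help Kubernetes protect slow-starting containers.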

Note

This type of probe is only executed at startup, unlike readiness probes, which are run periodically.
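When sizing a startup probe, make failureThreshold * periodSeconds cover the worst-case startup time. A tiny helper for that arithmetic (a sketch; the function name is made up):

```shell
#!/bin/sh
# Sketch: choose failureThreshold so failureThreshold * periodSeconds covers
# the worst-case startup time. The function name is made up.
# startup_failure_threshold <worst_case_startup_seconds> <periodSeconds>
startup_failure_threshold() {
  # Ceiling division: round up so the startup budget is never too short.
  echo $(( ($1 + $2 - 1) / $2 ))
}

threshold=$(startup_failure_threshold 300 10)
echo "failureThreshold: $threshold (budget: $(( threshold * 10 ))s)"
```

For a 5-minute worst case with a 10 s period this gives 30, and a 305 s worst case rounds up to 31 rather than cutting the budget short.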

Setup Snapshotter for Elasticsearch

This follows an article about Elasticsearch snapshots on Azure: Elastic Cloud on Kubernetes (ECK) Quickstart with Azure Kubernetes Service, Istio and Azure Repository plugin

You can use Terraform with Kubernetes manifests to apply this configuration:

# https://www.linkedin.com/pulse/elastic-cloud-kubernetes-eck-quickstart-azure-repository-ajay-singh/
resource "kubernetes_secret" "azure_snapshot_secret" {
  metadata {
    name      = "azure-snapshot-secret"
    namespace = var.namespace
  }

  binary_data = {
    "azure.client.default.account" = base64encode(var.remote_state.backup_storage_account_name)
    "azure.client.default.key"     = base64encode(var.remote_state.backup_storage_account_key)
  }

  depends_on = [
    helm_release.elastic_operator
  ]
}

# Register the Azure snapshot with the Elasticsearch cluster
resource "kubectl_manifest" "elasticsearch_register_snapshot" {
  yaml_body = <<YAML
apiVersion: batch/v1
kind: Job
metadata:
  name: ${var.name}-register-snapshot
  namespace: ${var.namespace}
spec:
  template:
    spec:
      containers:
        - name: register-snapshot
          image: curlimages/curl:latest
          volumeMounts:
            - name: es-basic-auth
              mountPath: /mnt/elastic/es-basic-auth
          command:
            - /bin/sh
          args:
            # - -x # Can be used to debug the command, but don't use it in production as it will leak secrets.
            - -c
            - |
              curl -s -i -k -u "elastic:$(cat /mnt/elastic/es-basic-auth/elastic)" -X PUT \
                'https://${var.name}-es-http:9200/_snapshot/azure' \
                --header 'Content-Type: application/json' \
                --data-raw '{
                  "type": "azure",
                  "settings": {
                    "client": "default"
                  }
                }' | tee /dev/stderr | grep "200 OK"
      restartPolicy: Never
      volumes:
        - name: es-basic-auth
          secret:
            secretName: ${var.name}-es-elastic-user
YAML

  depends_on = [kubectl_manifest.elasticsearch]
}

# Create the snapshotter cronjob.
resource "kubectl_manifest" "elasticsearch_snapshotter" {
  yaml_body = <<YAML
apiVersion: batch/v1
kind: CronJob
metadata:
  name: ${var.name}-snapshotter
  namespace: ${var.namespace}
spec:
  schedule: "0 16 * * 0"
  concurrencyPolicy: Forbid
  jobTemplate:
    spec:
      template:
        spec:
          nodeSelector:
            pool: infrapool
          containers:
            - name: snapshotter
              image: curlimages/curl:latest
              volumeMounts:
                - name: es-basic-auth
                  mountPath: /mnt/elastic/es-basic-auth
              command:
                - /bin/sh
              args:
                - -c
                - 'curl -s -i -k -u "elastic:$(cat /mnt/elastic/es-basic-auth/elastic)" -XPUT "https://${var.name}-es-http:9200/_snapshot/azure/%3Csnapshot-%7Bnow%7Byyyy-MM-dd%7D%7D%3E" | tee /dev/stderr | grep "200 OK"'
          restartPolicy: OnFailure
          volumes:
            - name: es-basic-auth
              secret:
                secretName: ${var.name}-es-elastic-user
YAML

  depends_on = [kubectl_manifest.elasticsearch_register_snapshot]
}

resource "kubectl_manifest" "elastic_cleanup_snapshots" {
  yaml_body = <<YAML
apiVersion: batch/v1
kind: CronJob
metadata:
  name: ${var.name}-cleanup-snapshotter
  namespace: ${var.namespace}
spec:
  schedule: "@daily"
  concurrencyPolicy: Forbid
  jobTemplate:
    spec:
      # ttlSecondsAfterFinished and backoffLimit are Job fields, so they belong here
      ttlSecondsAfterFinished: 86400
      backoffLimit: 3
      template:
        spec:
          nodeSelector:
            pool: infrapool
          containers:
            - name: clean-snapshotter
              image: debian:11.7
              imagePullPolicy: IfNotPresent
              volumeMounts:
                - name: es-basic-auth
                  mountPath: /mnt/elastic/es-basic-auth
              command:
                - /bin/sh
              args:
                - -c
                - |
                  # Install curl (not included in the debian image)
                  apt-get update && apt-get install -y curl
                  # Snapshots taken on this date are due for deletion
                  deletionDate=$(date -d "${var.retention_date} days ago" +%Y-%m-%d)
                  # List the snapshot names that contain the deletion date
                  listElasticSnapshots=$(curl -s --insecure -X GET "https://elastic:$(cat /mnt/elastic/es-basic-auth/elastic)@${var.name}-es-http:9200/_cat/snapshots/azure" | awk '{print $1}' | grep -e "$deletionDate")
                  if [ "$listElasticSnapshots" = "" ]; then
                    # Nothing to delete for that date
                    echo "No snapshots found for $deletionDate"
                    exit 0
                  else
                    # Remove one or more snapshots taken on the deletion date
                    for snapshot in $listElasticSnapshots; do
                      # --fail makes curl exit non-zero on HTTP errors, so failed deletions are reported
                      res=$(curl --fail -s -X DELETE --insecure "https://elastic:$(cat /mnt/elastic/es-basic-auth/elastic)@${var.name}-es-http:9200/_snapshot/azure/$snapshot" 2> /dev/null || echo "false")
                      if [ "$res" != "false" ]; then
                        echo "Deleted $snapshot"
                      else
                        echo "Failed to delete $snapshot"
                      fi
                    done
                  fi
          restartPolicy: OnFailure
          volumes:
            - name: es-basic-auth
              secret:
                secretName: ${var.name}-es-elastic-user
YAML

  depends_on = [kubectl_manifest.elasticsearch_register_snapshot]
}
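The cleanup job's date selection is easy to get wrong, so it helps to try it outside the cluster first. A sketch that mirrors that logic with fixed dates instead of "today"; it assumes GNU date (as in debian:11.7) and snapshot names that embed the date, such as snapshot-2024-01-08 from the <snapshot-{now{yyyy-MM-dd}}> pattern. The function names are made up:

```shell
#!/bin/sh
# Sketch: the cleanup CronJob's date selection, testable outside the cluster.
# Assumes GNU date and snapshot names that embed the date (snapshot-YYYY-MM-DD).

# deletion_date <YYYY-MM-DD> <retention-days>: the date whose snapshots expire.
# Works in UTC epoch seconds, so every day is exactly 86400 s (no DST surprises).
deletion_date() {
  ref=$(date -u -d "$1" +%s) || return 1
  date -u -d "@$(( ref - $2 * 86400 ))" +%Y-%m-%d
}

# snapshots_for_date <YYYY-MM-DD>: keep the snapshot names on stdin with that date.
snapshots_for_date() {
  grep -F -e "$1"
}
```

For example, with a 7-day retention, deletion_date 2024-01-15 7 selects 2024-01-08, and piping the names from _cat/snapshots through snapshots_for_date 2024-01-08 keeps only that day's snapshots. Because the job runs daily and matches one exact date, a day on which the job does not run leaves that day's snapshots in place.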