Architecture

1. Reddit - RTX 5090 Training Issues - PyTorch Doesn’t Support Blackwell Architecture Yet?

- This thread discusses a common issue encountered when training with PyTorch on an RTX 5090. Having personally faced and resolved this problem, I can confirm that the solutions provided in the thread are what you need.

- You’ll learn how to install a PyTorch build that matches your CUDA driver version and supports the card’s Blackwell architecture.
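
One way to see whether your installed PyTorch build actually targets your GPU is to compare the GPU's compute capability with the architectures the build was compiled for. The sketch below is my own illustration, not code from the thread. It assumes the RTX 5090 reports compute capability 12.0 (`sm_120`), and it only checks for an exact architecture match, so it ignores any PTX forward compatibility a build might offer. The helper names are hypothetical; the `torch.cuda` calls are only used inside `check_local_gpu`, which needs PyTorch installed.

```python
def device_arch(capability):
    """Turn a (major, minor) compute capability into an 'sm_XY' tag."""
    major, minor = capability
    return f"sm_{major}{minor}"


def build_supports(arch_list, capability):
    """True if the build ships kernels for this exact architecture."""
    return device_arch(capability) in arch_list


def check_local_gpu():
    """Run the check against the local install (requires torch)."""
    import torch  # imported lazily so the helpers above work without torch

    if not torch.cuda.is_available():
        return "CUDA is not available in this PyTorch build or on this machine"
    cap = torch.cuda.get_device_capability(0)  # e.g. (12, 0)
    archs = torch.cuda.get_arch_list()         # e.g. ['sm_80', 'sm_90', ...]
    status = "supported" if build_supports(archs, cap) else "NOT in this build"
    return (
        f"{torch.cuda.get_device_name(0)}: {device_arch(cap)} {status} "
        f"(torch {torch.__version__}, CUDA {torch.version.cuda})"
    )
```

If `check_local_gpu()` reports that your architecture is not in the build, that usually means you need a PyTorch build compiled against a newer CUDA toolkit, which is the kind of fix the thread walks through.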

2. Medium - The New AI Infrastructure Stack

3. Medium - How TCO, Specialization, and Governance Are Forging the Next AI Infrastructure Stack

- This article covers the latest developments in AI infrastructure, a particularly hot topic in recent months. It stays fairly high-level and leans on numerous figures, but it gives you a solid starting point for digging deeper into the subject. From my perspective, this knowledge can unlock new avenues for optimizing GPU resources, reducing costs, and building stable environments for AI production.

- You will encounter concepts such as Specialized Silicon (ASICs), Memory Disaggregation (CXL), Optical Interconnects (Photonics), Total Cost of Ownership (TCO), and more.

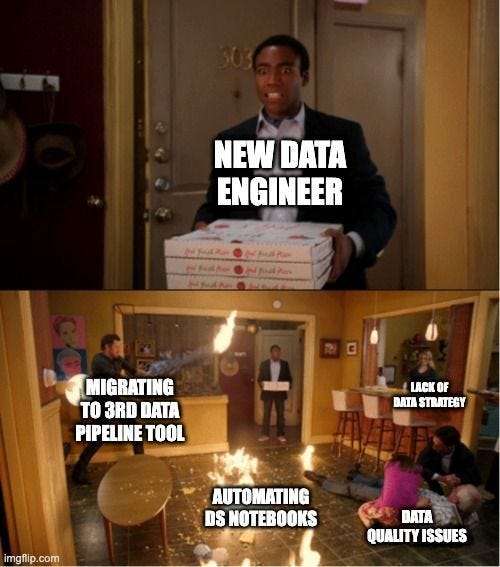

Data Engineer

1. Medium - Kafka Streams vs. Flink vs. Spark: The Brutally Honest Comparison

- This article offers a concise comparison of the “big three” in stream processing. It summarizes the advantages and disadvantages of each technology and provides use cases to help you make informed decisions for your production environment.

- The overview is designed to guide your choices and is particularly useful if you’re considering or planning to work with these technologies. However, always remember to select tools based on your specific purpose, rather than simply following trends or hype.

2. Doris - Empowering cyber security by enabling 7 times faster log analysis 🌟 (Recommended)

- This article shows how an organization tackled the challenges of processing and transferring data from their scanning workflow to ensure the cybersecurity of their customers. Through this case study, we will learn how they resolved two critical problems: slow data writing and slow query execution.

- They achieved significant improvements in speed and cost by upgrading their old infrastructure with Apache Doris (https://doris.apache.org/), leveraging its inverted index and NGram Bloom Filter capabilities.

- You can find more detailed information, including specific queries and performance numbers, within the post, which you can use to inspire new ideas for your own production environment.
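
To get an intuition for why an inverted index speeds up log search, here is a toy sketch in plain Python. It is not how Apache Doris implements its index, and the sample log lines and function names are made up for illustration. The idea is simply that each token maps to the set of rows containing it, so a keyword query becomes a few set intersections instead of a scan over every line.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_inverted_index(lines):
    """Map each token to the set of row ids whose line contains it."""
    index = defaultdict(set)
    for row_id, line in enumerate(lines):
        for token in tokenize(line):
            index[token].add(row_id)
    return index


def search(index, query):
    """Return ids of rows containing every token in the query (AND)."""
    tokens = tokenize(query)
    if not tokens:
        return set()
    result = set(index.get(tokens[0], set()))
    for token in tokens[1:]:
        result &= index.get(token, set())
    return result


# Hypothetical log lines, only for illustration.
logs = [
    "GET /login 200 from 10.0.0.5",
    "POST /login 401 from 10.0.0.9",
    "GET /admin 403 from 10.0.0.9",
]
index = build_inverted_index(logs)
failed_logins = search(index, "login 401")
```

The trade-off is the usual one: the index costs extra work and storage at write time in exchange for much cheaper lookups at query time.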

3. Medium - In Git We Trust: Git for Data over Data Lakes 🌟 (Recommended)

- This article starts by emphasizing Git’s pivotal role in our industry and how it underpins a solid strategy and discipline for secure code development, then shifts focus to Git’s application in data workflows. The author describes a seemingly simple process that, left unmanaged, can quickly escalate into significant budget losses when dealing with heavy data loads.

- To address this, they propose using Git for Data. The aim is to bring Git-style workflows to data by building on what table formats already offer, namely ACID transactions, schema changes, and table snapshots, to put their data under version control. This approach offers numerous benefits for data lakes, including secure development, enhanced collaboration, and “Time Travel” capabilities (much like git reset 😄).

- For greater clarity, the author presents a use case and explains how their company implements this solution. You’ll find practical examples, illustrations to aid visualization, and numerous code snippets demonstrating their implementation with Bauplan, a Pythonic data platform designed for transformation and AI workloads with built-in Git-for-data capabilities.
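
If the snapshot and “Time Travel” idea feels abstract, the toy class below may help. It is a minimal sketch of my own and has nothing to do with Bauplan’s actual API: `VersionedTable` and its methods are hypothetical names. Every commit produces a new immutable snapshot, reads can target any past snapshot, and `reset` moves the head back, roughly like resetting a branch.

```python
class VersionedTable:
    """Toy table with immutable snapshots, loosely analogous to commits."""

    def __init__(self):
        self._snapshots = [()]  # snapshot 0 is the empty table
        self._head = 0

    def commit(self, rows):
        """Append rows all-or-nothing and return the new snapshot id."""
        rows = list(rows)
        for row in rows:
            if not isinstance(row, dict):
                # Validate before touching state so a bad batch changes nothing.
                raise TypeError("every row must be a dict")
        new_snapshot = self._snapshots[self._head] + tuple(dict(r) for r in rows)
        self._snapshots.append(new_snapshot)
        self._head = len(self._snapshots) - 1
        return self._head

    def read(self, snapshot_id=None):
        """Read the head, or 'time travel' to an earlier snapshot."""
        sid = self._head if snapshot_id is None else snapshot_id
        return [dict(r) for r in self._snapshots[sid]]

    def reset(self, snapshot_id):
        """Move the head back to an earlier snapshot."""
        if not 0 <= snapshot_id < len(self._snapshots):
            raise IndexError("unknown snapshot id")
        self._head = snapshot_id


table = VersionedTable()
v1 = table.commit([{"id": 1, "status": "ok"}])
v2 = table.commit([{"id": 2, "status": "bad load"}])
table.reset(v1)  # undo the bad load without rewriting history
```

Real table formats store snapshots as metadata pointing at data files rather than copying rows, but the mental model of "a version is a pointer to an immutable state" is the same.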

4. Medium - Data Engineer Toolkit in 2025: Must‑Have Skills, Tools & Resources

- This article serves as a fundamental guide for your data engineering journey, introducing essential toolkits for building data pipelines, recommending databases, and outlining key techniques to add to your skillset.

5. Medium - Data Ingestion — Part 1: Architectural Patterns 🌟 (Recommended)

- This is the first in a two-part series on data ingestion and the crucial techniques for bringing diverse data sources into your data warehouse or analytics platform. In this initial article, the author presents various data ingestion patterns, including traditional methods like unified, data virtualization, ETL, and ELT, as well as newer techniques such as Push/Pull and Streaming.

- While many architectures exist, choosing wisely is essential for a strong start. You have countless technologies available, and selecting the one that best suits your process is critical to preventing future tech debt. It is an important decision, and hopefully this article helps you make it. 🤔

6. Medium - Data Ingestion — Part 2: Tool Selection Strategy 🌟 (Recommended)

- This is the sequel to the previous blog post. Now that you’ve chosen your architecture, it’s time to select the tech stack for ramping up your data pipeline. This decision involves numerous criteria, prompting several questions that need to be answered.

- Once you have those answers, you can determine which tools to adopt for your stack to handle data ingestion, covering methods like Batch Loading, Change Data Capture (CDC), Connector-Based Tools, and Code-Driven Data Ingestion. You’ll also need a clear strategy for which approach truly suits your needs. Read on, and then set up a Proof of Concept (POC) to validate your choice.
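
To make Change Data Capture a bit more concrete, here is a small sketch of the kind of change events a CDC pipeline moves around. It is my own simplification, not something taken from the article: production CDC tools typically read the database’s transaction log, whereas this example just compares two snapshots keyed by primary key. The function names and event fields are assumptions for illustration.

```python
def diff_snapshots(previous, current):
    """Compare two {primary_key: row} snapshots and emit change events."""
    events = []
    for key, row in current.items():
        if key not in previous:
            events.append({"op": "insert", "key": key, "after": row})
        elif previous[key] != row:
            events.append(
                {"op": "update", "key": key, "before": previous[key], "after": row}
            )
    for key, row in previous.items():
        if key not in current:
            events.append({"op": "delete", "key": key, "before": row})
    return events


def apply_events(target, events):
    """Replay change events onto a copy of the target table."""
    result = dict(target)
    for event in events:
        if event["op"] == "delete":
            result.pop(event["key"], None)
        else:
            result[event["key"]] = event["after"]
    return result


# Hypothetical source table at two points in time.
yesterday = {1: {"name": "alice"}, 2: {"name": "bob"}}
today = {1: {"name": "alice b."}, 3: {"name": "carol"}}
events = diff_snapshots(yesterday, today)
replica = apply_events(yesterday, events)
```

Compared with reloading the full table in every batch, shipping only these events keeps the transferred volume proportional to what actually changed, which is the main reason CDC shows up in tool selection discussions.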

7. Medium - Data Ingestion: 7 Challenges and 4 Best Practices

- This article outlines the challenges and tactics for your data ingestion strategies, highlighting their critical importance in data pipelines. It specifically addresses two popular solutions: streaming and batch processing.

- The article details seven common problems associated with data ingestion, ranging from time constraints and schema complexity to architectural issues, data duplication, and compliance concerns. Since data is the backbone of any production system, there are many aspects to consider. To improve your pipeline’s quality, the article provides several best practices, including implementing SLAs (Service Level Agreements), Decoupling, Quality Checks, and Automation.

8. Medium - Data Partitioning: Slice Smart, Sleep Better 🌟 (Recommended)

- This article presents a fun and interesting take on slicing your data into smaller pieces, a crucial technique that makes a significant difference when working with petabytes of data. With well-partitioned data, queries touch less data and run faster, and you deal with far fewer pain points.

- We’ll walk through each partitioning strategy, including Vertical, Horizontal, Sharding, and Functional, learning from real use cases to understand when each should be applied.

- Finally, you’ll learn about a major challenge in partitioning: Data Skew (https://www.secoda.co/glossary/data-skew), where data is spread unevenly across partitions. The article explains how to identify this problem and outlines the actions needed to address it.
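
Here is a small sketch of horizontal partitioning by hash, together with one simple way to spot skew. It is my own illustration rather than code from the article: the function names are hypothetical, and the "largest partition divided by the average" ratio is just one convenient metric. I use `zlib.crc32` instead of Python’s built-in `hash` so the partition assignment stays stable between runs.

```python
import zlib
from collections import Counter


def partition_for(key, num_partitions):
    """Stable hash partitioning: the same key always lands in the same partition."""
    return zlib.crc32(str(key).encode("utf-8")) % num_partitions


def partition_sizes(keys, num_partitions):
    """Count how many rows each partition would receive."""
    counts = Counter(partition_for(k, num_partitions) for k in keys)
    return [counts.get(p, 0) for p in range(num_partitions)]


def skew_ratio(sizes):
    """Largest partition divided by the mean size; 1.0 means perfectly balanced."""
    total = sum(sizes)
    if total == 0:
        return 0.0
    return max(sizes) / (total / len(sizes))


# Hypothetical workload: one "hot" customer produces 90% of the rows.
keys = ["hot_customer"] * 90 + [f"customer_{i}" for i in range(10)]
sizes = partition_sizes(keys, 4)
ratio = skew_ratio(sizes)
```

Because every row with the same key goes to the same partition, a single hot key is enough to overload one partition no matter how good the hash function is. That is why checking the ratio on real key distributions before choosing a partition key is worth the few minutes it takes.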

Kubernetes

1. Medium - Are You Really a Kubernetes Expert? Take This 10-Question Challenge to Find Out

- This article presents genuinely fun and insightful questions to deepen your understanding of Kubernetes; I certainly learned a lot from them. It collects key questions you should tackle hands-on in a lab, each pointing you toward topics worth exploring in more depth.

- This list is a good gauge of your Kubernetes experience, and I believe it’s accurate because it aligns with real-world use cases. Keep learning from these challenges, whether for interviews, for building knowledge, or for your overall Kubernetes adventure.